Book Review

Acemoglu D & Robinson JA (2013) Why Nations Fail. The Origins of Power, Prosperity and Poverty Profile Books

A satellite photograph of Korea at night shows North Korea as dark as – well – night, whilst South Korea blazes forth with light pollution. The South is the 29th richest country in the world with a GDP of $37,000 per head. The North is one of the poorest ($1,800 GDP per head) suffering from periodic famine and desperate poverty. Why is this?

One easy answer is that the North is a dictatorship whereas the South is a democracy. Democracies are good; dictatorships are bad.

It is not so simple.

At the end of WWII Korea was divided between North and South at the 38th parallel. In 1950 the North invaded the South and almost succeeded in overrunning it. At the end of the Korean War (1953) the states were again divided, but both were dictatorships. The South’s GDP increased at 10% per year between 1962 and 1979. It only became a democracy in 1987 with separate executive, legislative and judicial bodies after a succession of 3 dictators (two by coups d’état, one of whom was assassinated).

Figuring out what are the vital factors and what drives the changes occurring in a society is difficult. There are no two identical societies in which one isolated factor can be changed to see what happens. Any theory is liable to have elements of pre-supposing the answer (for example: democracy versus dictatorship). So any theory about how and why some nations become tolerant and prosperous but others become intolerant and poverty stricken is likely to be controversial. Similar problems arise in trying to account for why formerly tolerant and prosperous nations reverse and become repressive and poor.

It is this problem of why some nations succeed and some fail that Daron Acemoglu, a Turkish-American professor of economics at M.I.T., and James Robinson, a British professor of public policy studies at the University of Chicago (hereafter A&R), have tackled in their book Why Nations Fail: The Origins of Power, Prosperity and Poverty. This book has been generally well received.

I will outline some of the earlier theories and the criticisms by A&R and then their theory and some of the criticisms that have been levelled against it.

Geography

The map of the world shows affluent societies in the temperate areas and poor societies in the tropical areas within 30° of the equator. This is particularly marked in Africa. The idea then is that the great division between rich and poor countries is caused by geography. The reasons for this are the pervasiveness of tropical diseases such as malaria, the scarcity of animals that could be used as cheap labour, and the poverty of the soil. There are exceptions, for example, the rich countries of Singapore and Malaysia, but both of these have access to the sea. This allows trade because it is much cheaper to transport cargo by sea than by land.

A&R criticise this theory on several grounds despite its initial appeal. The Indus Valley civilisation is the first recorded great civilisation and it was situated in what is modern Pakistan, much of it within 30° of the equator. Central America before the Spanish invasions was richer than the temperate zones. One of the world’s currently poorest nations, Mali (GDP $18 billion), where half of the population of 14.5 million live on less than $1.25 per day, was once ruled by the richest person who has ever lived. Mansa Musa Keita I (c. 1280 – c. 1337) had a fortune of $400 billion in today’s money. His wealth included vast quantities of gold, slaves, salt and a large navy(!).

“History … leaves little doubt that there is no simple connection between tropical location and economic success.” (A&R p51) There are also vast differences in wealth within the tropics and temperate regions at the present time. There is a sharp line between poverty and prosperity between North & South Korea, between Mexico and the United States, and between East and West Germany before reunification.

Ignorance

The reason poor nations are poor according to this hypothesis is that their governments are not educated in how a modern economy should be run. Their leaders have mistaken ideas on how to run their countries. Certainly, leaders of central African countries since independence have made bad decisions when viewed from outside. The IMF recommend a list of economic reforms that poor states should undertake including:

- reduction of the public sector,

- flexible exchange rates,

- privatization of state run enterprises,

- anticorruption measures and

- central bank independence.

The central bank of Zimbabwe became ‘independent’ in 1995. It was not long before inflation took off reaching 11 million % pa (officially) by 2008 with unemployment around 80%.

But according to A&R it is not ignorance that is the source of bad decisions: “Poor countries are poor because those who have power make choices that create poverty. They get it wrong not by mistake or ignorance but on purpose. To understand this, you have to go beyond economics and expert advice on the best thing to do and, instead, study how decisions actually get made, who gets to make them, and why those people decide to do what they do.” (p68)

Culture

One idea about the rise of Europe from the 17th century was that it was caused by the ‘Protestant work ethic’. Alternatively, the relative prosperity of former British colonies like Australia and the U.S.A. was caused by the superior British culture. Or perhaps it is just European culture that is better than the others. These smug ideas don’t hold much water when you look at China and Japan, or when you look at the conduct of the European powers in their colonies. Some of those colonies are now prosperous and some are not.

At the start of the Industrial Revolution in the 18th and 19th centuries Britain had relative stability. It had a tolerant clubby society that encouraged individualism. It protected invention through patents. It had a market for mass-produced goods. According to A&R this was not culturally caused. Rather it was the result of definite structures in society and political arrangements. (p56)

Modernisation

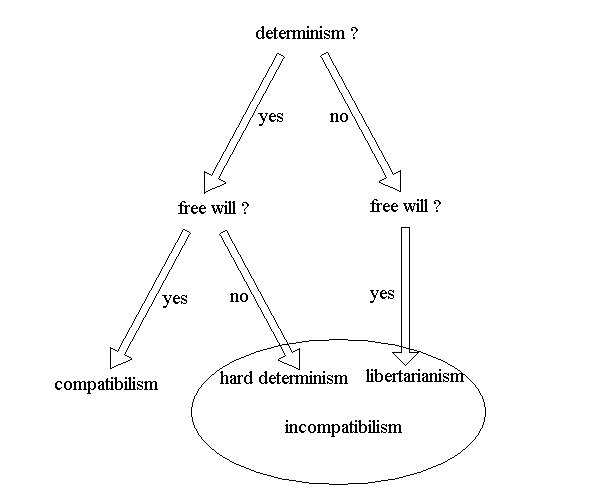

According to this theory (also known as the Lipset or Aristotle theory) when countries become more economically developed they head towards pluralism, civil liberties and democracy. There is some evidence that this holds in Africa since 1950. (Anyanwu & Erhijakpor 2013)

But A&R object that US trade with China has not (yet) brought democracy there. The population of Iraq was reasonably well educated before the US-led invasion, and it was believed to be a ripe ground for the development of democracy, but those hopes were dashed. The richness of Japan and Germany did not prevent the rise of militaristic regimes in the 1930s. (p443)

Other Theories

There are other theories about where and when prosperity will arise or disappear.

Several experts discuss the cause of the Industrial Revolution in The Day the World Took Off (Dugan 2000). They surmise:

- historical accident (p182);

- capitalism (p135);

- the availability of raw materials (p66);

- consumerism (p64);

- the habit of drinking tea or beer rather than contaminated water (p18);

- the need to measure time (p100);

- the rise of the merchant classes (bourgeoisie) (p141).

Also discussed in The Day the World Took Off is the settlement in the Glorious Revolution of 1688 which brought political stability (p82). Finance became available through the establishment of the Bank of England. Incentives for investment, trade and innovation appeared through the enforcement of property rights and patents for intellectual property.

Acemoglu & Robinson

The political and economic factors exemplified by the Glorious Revolution are what A&R develop in their 500+ page book on why nations fail. A&R make several major points in their analysis.

1. Centralization

The first requirement for economic growth is a centralized political set-up. Where a nation is split into factions, as is the case today in Somalia and Afghanistan, it is difficult to centralize power. This is because “any clan, group or politician attempting to centralize power in the state will also be centralizing power in their own hands, and this is likely to meet the ire of other clans, groups and individuals who would be the political losers of this process.” (p87) Only when one group of people is more powerful than the rest can centralization occur.

2. Extractive Economic Institutions

Economic institutions are critical for determining whether a country is poor or prosperous. A&R define extractive economic institutions as those “designed to extract incomes and wealth from one subset of society to benefit a different subset.” (p76) The feudal system that existed in Europe around 1400 and persisted in places into the 20th century was extractive. Wealth flowed upwards from the many serfs to the few lords. In later times, under colonialism, wealth flowed away from the locals to the colonists. A particular example was King Leopold II (1835-1909) of Belgium, who ruled over the Congo Free State from 1885 to 1908. He built his personal wealth through copper, ivory and rubber exports supervised by a repressive police force that enforced the local slave labour. A considerable but unknown proportion of the population were murdered or mutilated in the pursuit of Leopold’s wealth. (Bueno de Mesquita 2009)

Economic growth can occur where there are extractive economic institutions provided there is centralization of power. It is in the interest of the exploiters to increase production for their own gain. A&R claim that this growth cannot continue for ever. It comes to an end “because of the absence of new innovations, because of political infighting generated by the desire to benefit from extraction, or because the nascent inclusive elements were conclusively reversed…” (p184) They thus predict that China’s growth will stall unless it manages somehow to transition to inclusive institutions (p442).

3. Extractive Political Institutions

Extractive economic institutions are set up by whoever it is that has political power. They will be better off if they can extract wealth from the rest of society and use that wealth to increase their power. “[They] have the resources to build their (private) armies and mercenaries, to buy their judges, and to rig their elections in order to remain in power. They also have every interest in defending the system. Therefore, extractive economic institutions create the platform for extractive political institutions to persist. Power is valuable in regimes with extractive political institutions, because power is unchecked and brings economic riches.” (p343)

4. Inclusive Political Institutions

Political institutions that distribute power broadly in society and subject it to constraints are pluralistic. Inclusive political institutions are those that “are sufficiently centralized and pluralistic.” (p81)

This agrees with the view of the American political scientist Bruce Bueno de Mesquita that one of the main factors in having benevolent government is the presence of a large coalition (which he calls the ‘selectorate’) of those who have a say in who rules. (Bueno de Mesquita 2009)

The Glorious Revolution in Britain of 1688 limited the power of the king and gave parliament the power to determine economic institutions. It opened up the political system to a broad cross section of society. They were able to exert considerable influence over the way the state functioned.

Before 1688 the king had a ‘divine right’ to rule the state by law. Afterwards even the king was subject to the Rule of Law. “[The Rule of Law] is a creation of pluralist political institutions and of the broad coalitions that support such pluralism. It is only when many individuals and groups have a say in decisions, and the political power to have a seat at the table, that the idea that they should all be treated fairly starts making sense.” (p306)

Britain stopped censoring the media after 1688. Property rights were protected. Even ‘intellectual property’ was protected through patents, which enabled innovators and entrepreneurs to gain financially from their ideas. According to A&R it is no accident that the Industrial Revolution followed a few decades after the Glorious Revolution. (p102)

‘Inclusive Political Institutions’ is not the same as democracy. Great Britain after the Glorious Revolution was not a democracy in the modern sense. The franchise was limited and representation was disproportionate. For instance, the constituency of Old Sarum in Wiltshire had 3 houses, 7 voters and 2 MPs. Not until 1832 did the franchise extend to 1 in 5 of the male population. Only in 1928 did all women get the vote. Similarly, the prosperous nation, the United States, did not grant the franchise to ‘all’ males until 1868, to ‘all’ females until 1920 and to all African Americans until 1965.

There are many examples of countries where democratic voting occurs but few political institutions of an inclusive nature, if any, exist. There, ‘democracy’ tends to be a conflict between rival extractive institutions.

According to A&R the reason the Middle East is largely poor is not geography. It is the expansion and consolidation of the Ottoman Empire and its institutional legacy that keeps the Middle East poor. The extractive institutions established under that regime persist to the present day. It is just different people running them.

5. Inclusive Economic Institutions

“Inclusive economic institutions … are those that allow and encourage participation by the great mass of people in economic activities that make best use of their talents and skills and that enable individuals to make the choices they wish. To be inclusive, economic institutions must feature secure private property, an unbiased system of law, and a provision of public services that provides a level playing field in which people can exchange and contract; it must also permit the entry of new businesses and allow people to choose their careers.” (p74)

These features of society all rely on the state. It alone can impose the law, enforce contracts and provide the infrastructure whereby economic activity can flourish. The state must provide incentives for parents to educate their children, and find the money to build, finance and support schools.

Economic growth and technological change are what make human societies prosperous. But this entails what the Austrian-American economist Joseph Schumpeter called ‘creative destruction’. This term describes the process whereby innovative entrepreneurs create economic growth even whilst it endangers or destroys established companies. “[The] process of Creative Destruction is the essential fact about capitalism. It is what capitalism consists in and what every capitalist concern has got to live in.” (Schumpeter 1942)

A&R opine that the fear of creative destruction is often the reason for opposition to inclusive institutions. “Growth… moves forward only if it is not blocked by the economic losers who anticipate that their economic privileges will be lost and by the political losers who fear that their political power will be eroded.” (p86) Opposition to ‘progress’ comes from protecting jobs or income, or protecting the status quo.

“The central thesis of this book is that economic growth and prosperity are associated with inclusive economic and political institutions, while extractive institutions typically lead to stagnation and poverty. But this implies neither that extractive institutions can never generate growth nor that all extractive institutions are created equal.” (p91)

6. Critical Junctures

A critical juncture is when some “major event or combination of factors disrupts the existing balance of political or economic power in a nation.” (p106) Similar events such as colonization or decolonization have affected many different nations, but what happens to the society at such critical junctures depends on small institutional differences.

A hundred years before the Glorious Revolution England was ruled by an absolute monarch (Elizabeth I). Spain was ruled by Philip II and France by Henry III. There was not much difference in their powers, except that Elizabeth had to raise money through parliament. Henry and Philip were able to monopolize transatlantic ‘trade’ for their own benefit. Elizabeth could not because much of the English trade was by privateers, who resented authority. It was these wealthy merchant classes who played a major role in the English Civil War and the Glorious Revolution.

“Once a critical juncture happens, the small differences that matter are the initial institutional differences that put in motion very different responses. This is the reason why the relatively small institutional difference led to fundamentally different development paths. The paths resulted from the critical juncture created by the economic opportunities presented to Europeans by Atlantic trade.” (p107)

________________

Criticisms

One of the difficulties with political and social theory is that once a formula has been hit upon, everything then becomes interpreted in the light of that formula. Once Marx had explained economics in terms of labour and its exploitation, there was no room for those who espoused that idea to see anything different. So extractive versus inclusive institutions could be just another seductive idea.

1. Economists Michele Boldrin, David Levine and Salvatore Modica make a similar point in their review (Boldrin, Levine & Modica 2012). They say that if we lack an axiomatic definition of what is ‘inclusive’ and what is ‘extractive’, independent of actual outcomes, then the argument becomes circular and subject to a selection bias. Some of A&R’s examples are “a bit strained”.

For example, after Julius Caesar established the ‘extractive empire’ the ‘fall of Rome’ did not occur for four centuries. The success of South Korea, Taiwan and Chile (which had non-inclusive political institutions but evolved into inclusive ones) might lead one to suppose that “pluralism is the consequence rather than the cause of economic success.” (The Anyanwu study mentioned above in connection with the modernisation theory did find a correlation between economic success and democracy in Africa. But they also found that the extent of oil reserves in the country tended to stop the development of democracy. This is what you would expect from A&R. I think there are cross-causative factors. The rise of the merchant classes in England was a major factor in the development of English politics, as A&R show.)

In the case of Italy the political institutions are the same in the North and the South. But the North is prosperous whereas the South is dependent on handouts from the North. BL&M acknowledge that the South suffers from economic exploitation (Mafia) but this suggests that political institutions are only part of the story since there is no national border. They also say there is a danger in using satellite photographs as economic evidence, as in this particular case “the poorest part of Italy is the most brightly lit.” The apparent brightness of parts of photographs depends on several factors including the curvature of the Earth and where the satellite is with respect to the subject. The picture of Italy here shows the North (the Po Valley) as the most brightly lit.

Germany from the mid-19th century until the end of WWII prospered under extractive institutions, and led the world in its chemical industry. It did have compulsory education and social insurance and an efficient bureaucracy, but it could hardly be thought of as inclusive. Nazi Germany invented and produced the first jet planes and rockets. The “brief period of inclusiveness, the Weimar Republic, was an economic catastrophe.”

Again, the Soviet Union “did well under extractive communist institutions,” but floundered after a coup d’état established inclusive political institutions.

According to BL&M, Zimbabwe is a disastrous case of moving towards more inclusive institutions by extending the franchise to a wider population and lifting trade restrictions. (I find it difficult to believe that Zimbabwe can be regarded as consisting of inclusive political and economic institutions.)

BL&M suggest that the focus of A&R is on what happens within nations, whereas a great many developments within nations depend on what happens between nations, not least invasions and war. BL&M perceive that many historical crises, including the current crisis in Greece, stem from debt, yet A&R do not mention this. The French Revolution and the rise of Nazism came from debt crises, as did the English Civil War.

BL&M argue against A&R’s stance that the intellectual property rights brought in after the Glorious Revolution were one of the spurs for the Industrial Revolution. They show that patents were barriers to progress. They are passionate advocates of liberalizing copyright, trademark and patent laws, which they see as the enemy of competition and ‘creative destruction’. (Boldrin & Levine 2008) I have sympathy with this view, but that’s a different story for another day (see also Hargreaves 2011).

What BL&M’s cases seem to suggest is that we need stricter criteria for ‘inclusive’ and ‘extractive’. These nations were inclusive in some respects and extractive in others. It is difficult to decide which were or are the most pertinent factors.

A further complication is the passage of time. How long before an ‘inclusive’ or ‘extractive’ feature starts to make a change to the society? A&R do not suggest that prosperity manifests immediately or immediately disappears when a society transitions from one to the other.

2. One of the principal proponents of the geography theory is Jared Diamond, a professor of Geography at the University of California, Los Angeles. He acknowledges that inclusive institutions are an important factor (perhaps 50%) in determining prosperity but not the overwhelming factor (Diamond 2012). He favours historically long periods of central government and geography as major factors. He also makes the point that why each of us as individuals becomes richer or poorer depends on several factors. These include “inheritance, education, ambition, talent, health, personal connections, opportunities and luck…” So there is no simple answer to why nations become richer or poorer.

3. William Easterly, a professor of economics at New York University, complains that A&R have “dumbed down the material too much” by writing for a general audience. They rely on anecdotes rather than rigorous statistical evidence (when “the authors’ academic work is based on just such evidence”). So the book “only illustrates the authors’ theories rather than proving them.”

Conclusions

All three of these critical reviews acknowledge that Why Nations Fail is a great book. It should be read by anyone with an interest in politics.

Apart from the central thesis outlined above A&R provide many examples and great historical detail. This alone makes it a good read, even if you have philosophical aversions to the conclusions.

There is no simple solution to the problem of failed states but at least a correct diagnosis might lead to a greater percentage of success. Such explanations as ‘geography’, ‘culture’ and ‘historical accident’ do not offer much hope. Imposing ‘democracy’ on states that are anarchic or repressive does not seem to have worked so far, though it might form part of a solution once the system that has kept the nation repressed has been remedied.

You might think that the people who are in charge of states that extract wealth from their populations and gather power to themselves are psychopaths. They probably are. But it is usually the system that has existed for a considerable time, or is easily adapted to this end, that exists before the person takes power. The system tends to persist longer than any individual. There are more than enough psychopaths around to engineer a revolution or coup that puts them in charge when they see the advantages that may accrue. So getting rid of a dictator is only likely to replace him with another one. Where it does not, the likely consequence is the de-centralisation of the state with warring factions.

A&R make the point that “avoiding the worst mistakes is as important as – and more realistic than – attempting to develop simple solutions.” (p437)

References

Acemoglu D & Robinson JA (2013) Why Nations Fail. The Origins of Power, Prosperity and Poverty Profile Books

Anyanwu JC & Erhijakpor AEO (2013) Does Oil Wealth Affect Democracy in Africa? African Development Bank available at http://www.afdb.org/fileadmin/uploads/afdb/Documents/Publications/Working_Paper_184_-_Does_Oil_Wealth_Affect_Democracy_in_Africa.pdf

Boldrin M & Levine DK (2008) Against Intellectual Monopoly. Cambridge University Press, available at http://www.micheleboldrin.com/research/aim/anew01.pdf

Boldrin M, Levine D & Modica S (2012) A Review of Acemoglu and Robinson’s Why Nations Fail available at http://www.dklevine.com/general/aandrreview.pdf

Bueno de Mesquita B (2009) Predictioneer The Bodley Head (published in the USA as ‘The Predictioneer’s Game’)

Hargreaves I (2011) Digital Opportunity. A Review of Intellectual Property and Growth (report commissioned by UK government) available at https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/32563/ipreview-finalreport.pdf

Schumpeter J A (1942) Capitalism, Socialism and Democracy Harper & Brothers

Taylor F (2013) The Downfall of Money: Germany’s Hyperinflation and the Destruction of the Middle Class Bloomsbury
