by Michael Davidson

(response to the article Machine Consciousness: Fact or Fiction? by Igor Aleksander available at http://footnote1.com/machine-consciousness-fact-or-fiction )

The eminent AI researcher Igor Aleksander tackles the question of whether a machine could be conscious in the above article, posted in February 2014.

Aleksander assumes that being conscious is an advantage to an organism such as a human being. Consciousness therefore has a function.

This is a step forward from some philosophical positions:

a) consciousness has no function (epiphenomenalism – originating with Thomas Huxley in the 19th century [Huxley 1912] and espoused typically by some neuroscientists and psychologists today [Soon et al 2008; Wegner & Wheatley 1999]).

b) “psychology must discard all reference to consciousness… [must] never use the terms consciousness, mental states, mind, content, introspectively verifiable, imagery and the like.” [psychological behaviourism – Watson 1913].

c) any statement involving mind words (such as consciousness, intentions) can be paraphrased without any loss of meaning into a statement about what behaviour would result if the person considered happened to be in a certain situation. [philosophical behaviourism – Ryle 1949].

d) consciousness does not exist [Eliminative Materialism eg Churchland 1981].

e) the self does not exist [New Scientist 23 Feb 2013] see my response here.

Aleksander believes that consciousness can be understood in ‘scientific’ terms, though what he means by ‘scientific’ is not explained (that would require several thousand words on its own and is not a subject devoid of controversy itself). I suspect when he says ‘scientific’ he means physicalist since the core – metaphysical – assumption of most AI is that all things can ultimately be explained through the theories of physics and computation.

It is Aleksander’s contention that it is not possible to know that people other than ourselves are conscious because we have no tests to tell us; we simply believe they are because they are human. (Or is it that we only believe they are ‘human’?). ‘Non-scientific’ ordinary humans simply believe things based upon assumptions. He invites us to believe that ‘tests’ – by which I presume he means ‘scientific’ tests – could confer upon us some kind of certainty and proof.

This seems to miss the important point that scientific theories are built up from observations by humans. A theory is not necessarily true; it is just the best idea we have which

1) explains the observations leading to some understanding

2) predicts new observations that can be tested and may be found to be true (it’s the observations that are true not necessarily the theory) and

3) enables us to (ultimately) control the phenomena in question.

The observations are repeatable by anyone who treads the same path of learning. Thus we can build jumbo jets and hadron colliders.

Aleksander quotes from the report of a conference of neurologists, computer scientists and philosophers on machine consciousness (Swartz Foundation 2001): “We know of no fundamental law or principle operating in this universe that forbids the existence of subjective feelings in artefacts designed or evolved by humans.” Maybe so, but we don’t know of any fundamental law that allows it either. Nor do we know, if it be allowed, how consciousness manifests from the material of brains. Metaphysics creeps easily into science at the fringes.

We have had several revolutions in science when theories that seemed to explain everything in their sphere of enquiry were found to be flawed. The classic example is Newton’s ‘Laws’ which were held to be metaphysically ‘true’ for 250 years. Newton’s Laws could not be ‘proved’ but we certainly believed them then – and we believe them now in the context for which they were formulated. Newton’s metaphysical assumptions (absolute space and time ‘flowing’ evenly) and the metaphysical conclusions derived from his works (the ‘clockwork universe’) have been overturned.

The idea that humans are conscious can certainly be subject to qualitative tests. Apart from the obvious observations of whether a person is asleep or awake, we can see to some degree whether a person is alert and aware of his or her surroundings. The theory that that person is conscious explains his or her bearing and demeanour, enables us to enter meaningful conversation, enables us to discuss what we simultaneously perceive or simultaneously imagine, cooperate in joint ventures and so on. It is difficult to imagine how science could be conducted if ‘scientists’ were not conscious.

Evidently most people do not doubt their own consciousness and if there are indeed no means to determine whether other people are conscious it is only a small step from there to solipsism – the view that the world and other minds exist only in one’s own mind. This is contrary to the physicalist position that only physical things exist.

Aleksander’s first step is to define consciousness (a notoriously difficult task). You can exclude too much or include too much. You can limit it to simply the difference between a person who is conscious and one who is unconscious, concentrating on anaesthetics and their antidotes. Or you can broaden it to personal identity – the self – moral intuitions and even ‘cosmic consciousness’. You can also divide the concept in various ways –

‘phenomenal consciousness’ (awareness of here and now) and ‘social consciousness’ (which presupposes concepts, abstraction and language) [Guzeldere 1995] or

‘core consciousness’ (awareness of here and now) and ‘extended consciousness’ (an elaborate sense of self) [Damasio 1999] or

‘access consciousness’ (the contents of the mind accessible to thought, speech and action) and ‘phenomenal consciousness’ (what it is like to be me) [Block 1994]

(‘What it is like’, which I also allude to in my title, is a reference to the seminal article by Thomas Nagel “What is it like to be a bat?” [Nagel 1974])

Here is Aleksander’s definition:

consciousness is a “collection of mental states and capabilities that include:

1. a feeling of presence within an external world,

2. the ability to remember previous experiences accurately, or even imagine events that have not happened,

3. the ability to decide where to direct my focus,

4. knowledge of the options open to me in the future, and

5. the capacity to decide what actions to take.”

Aleksander reckons that if a machine endowed with language could report similar sensations, we would have as much reason to assume that it is conscious as we have with another human being. I suspect the five points are framed in such a way as to allow the construction of an artefact that could be said to be ‘conscious’, thus satisfying the metaphysical first principle that humans are machines.

Aleksander has built a machine, ‘VisAw’, which he claims satisfies points 1, 2 and 3.

The machine is a set of artificial neural networks with the inputs and outputs displayed on a computer screen. There is a picture of the screen in the referenced article.

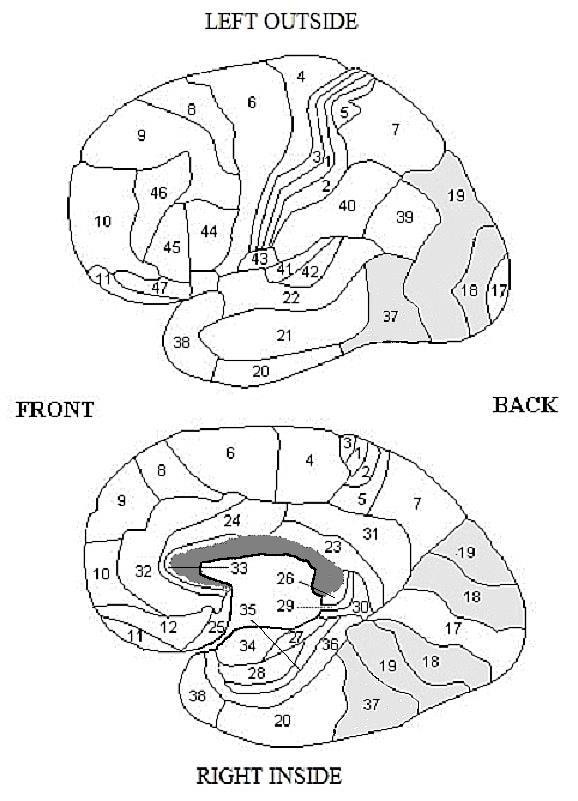

VisAw is based on the architecture of the part of the human brain called the extrastriate cortex – ie Brodmann areas 18, 19 and 37. The cortex was studied microscopically by K Brodmann (1868-1918) in 1909 and various areas were found to have different structures, now given numbers. The various functions of the cortex are only loosely related to the Brodmann areas. The parts of the cortex involved in vision are labeled V1, V2, V3, V4 and V5. The striate cortex (V1 located in B17) is so named because it is striped or striated.

(The two hemispheres of the brain are similar)

Simplistically and briefly, V1 maps to the retina and detects edges; V2 ‘fills in’ illusory edges; V3 is concerned with motion; V4 is concerned with spatial frequency, shape and colour and is affected by attention; V5 is concerned with the perception of motion and the guiding of some eye movements (the ‘picture’ of our surroundings is built up from many snapshots from the central sensitive area of the retina). (Damage to one or other of these areas of the brain results in a characteristic neurological disorder such as not being able to perceive motion).

VisAw models V3, V4 and V5 at least to some extent.

Let’s look at Aleksander’s points more closely:

1) a feeling of presence within an external world.

Applying this to humans, most people will certainly regard this as true for themselves, but Aleksander asks how do we know this for others? One test is to ask them whether they feel they are in an external world. A reasonable test you might think, but not one that is 100% reliable. The following simple program can do this but few would consider the answer as reflecting the internal feeling.

INPUT x

IF x = “Do you feel you are present in an external world?” THEN

OUTPUT “of course”

You might think this example is too ridiculous for anyone to be taken in by it, but many simple conversational programs have taken people in (eg ELIZA [Weizenbaum 1976] fooled some people into revealing intimate secrets and some practicing psychiatrists believed that ELIZA could develop into an automatic form of psychotherapy). It is only when the mechanism of how the program functions is revealed that the magic disappears. Here’s an example:

Person: “My dog’s mother died recently.”

ELIZA: Tell me more about your mother

(it doesn’t recognise anything in the person’s statement apart from the word ‘mother’)

Aleksander contends that by suitable manipulation “the content of a machine’s ‘mind’ can be made transparent and observable, [so] attributing consciousness to it may be no more outlandish than attributing consciousness to a living creature.” Obviously my little program would fail this test. In the case of a neural network the workings are relatively obscure since they do not depend on rules written by a programmer, but they are not necessarily any more magical. The display of a message “I am imagining” does not presuppose any ‘I’ attached. If we expose the workings of VisAw it is not clear that there are any feelings connected to it. So it is difficult to see how we would know that the machine has any feeling at all.

Leibniz made the same point: “Perception and that which depends upon it are inexplicable on mechanical grounds, that is to say, by means of figures and motions. And supposing there were a machine, so constructed as to think, feel, and have perception, it might be conceived as increased in size, while keeping the same proportions, so that one might go into it as into a mill. That being so, we should, on examining its interior, find only parts which work one upon another, and never anything by which to explain a perception.” (Leibniz 1714)
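To make the point about exposed mechanisms concrete, here is a minimal Python sketch of the two kinds of program discussed above: the one-line responder and an ELIZA-style keyword matcher. The rule table, the default reply and the function names are my own assumptions for illustration; this is not Weizenbaum's actual script, only a rough imitation of the behaviour shown in the exchange about the dog's mother.

```python
import re

# Hypothetical rule table (an assumption for illustration, not ELIZA's real script):
# a keyword mapped to a canned reply.
KEYWORD_REPLIES = {
    "mother": "Tell me more about your mother",
    "father": "Tell me more about your father",
}
DEFAULT_REPLY = "Please go on"


def literal_responder(question: str) -> str:
    """The one-line program from the text: an exact string comparison."""
    if question == "Do you feel you are present in an external world?":
        return "of course"
    return ""  # any other input produces no answer at all


def keyword_responder(statement: str) -> str:
    """Reply to the first known keyword; the rest of the statement is ignored."""
    words = re.findall(r"[a-z]+", statement.lower())
    for word in words:
        if word in KEYWORD_REPLIES:
            return KEYWORD_REPLIES[word]
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(literal_responder("Do you feel you are present in an external world?"))
    print(keyword_responder("My dog's mother died recently."))
```

Once the table is visible, it is plain that neither function represents a dog, a death or a feeling of presence anywhere; each only compares strings.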

From what I can tell of VisAw it seems to ‘remember’ and ‘recognise’ two-dimensional pictures of human faces. Humans and other living creatures exist in three dimensions. According to the psychologist JJ Gibson, perception depends on the creature being able to move in the real world and so build up a three-dimensional model of the world in which it is located and moves, rather than a world which rotates around the creature, as if on a TV screen. [Gibson 1986]

2. the ability to remember previous experiences accurately, or even imagine events that have not happened.

It could be argued that a DVD recorder has the ability to remember audio visual experiences but it seems unlikely that such a machine would be conscious. Thus displaying the contents of VisAw’s memory does not illustrate consciousness. Nor does the recombination of several parts of previous experiences demonstrate imagination, I would contend. Imagination is creative not merely reconstructive. A display on the computer screen saying “I am imagining” does not constitute imagining.

On the other hand humans don’t remember accurately anyway. A robot that was similar cognitively to a human but with an ‘accurate memory’ would probably illustrate the phenomenon of the ‘uncanny valley’. This refers to the revulsion humans typically experience when a robot is visually almost indistinguishable from a human being. Such robots are experienced as distinctly ‘creepy’.

The criterion seems somewhat divorced from consciousness as such. People with serious damage to the hippocampus (part of the brain associated with certain aspects of location and memory) typically cannot remember anything before a few minutes ago (though they can remember things before the injury occurred). Such people can nevertheless learn skills such as playing the piano but cannot remember actually learning to do so, though they are definitely conscious according to the people who meet them.

3. the ability to decide where to direct my focus.

This seems to postulate ‘free will’ although most modern philosophers consider this to be an illusion and argue that all our actions are determined by prior causes. This is the consequence of the predominance of the metaphysics of physicalism. So I suppose that the machine must simply say that it has this ability for it to be considered that it has it (just like humans are supposed to). The question of ‘free will’ is another thorny issue that requires several thousand words to summarise.

In so far as VisAw actually models the extrastriate cortex and produces something which seems to work in the way the cortex does, this is a big achievement. But the extrastriate cortex is concerned with vision, and although vision is arguably a central part of consciousness (tell that to a blind man) it is not the whole of it.

Neuroscientists have not come out and claimed that the centre of consciousness is in the extrastriate cortex. Some have identified the thalamus as the centre of consciousness, since most of the sensory nerves converge on it. Others have suggested B40 is the seat of the sense of self; B24 the seat of free will; B9, B10, B11 and B12 the centre of the executive functions. Others have said that consciousness is a function of the entire brain or even the whole body.

It is too much to require that tentative steps to what might become ‘machine consciousness’ should demonstrate the whole phenomenon immediately. But I suspect that even a partial demonstration is a way off.

The Turing Test, suggested by Alan Turing in 1950, asks whether a computer and a human being can be distinguished when the only medium is a text message. Turing estimated that a suitable program could be written in about 3000 man years by about the year 2000, and that an average interrogator would then have no more than a 70% chance of telling machine from human after five minutes of questioning. [Turing 1950] By contrast the program for the NASA space shuttle took about 22,000 man years to develop and I have not yet heard a claim that the shuttle is conscious. Optimism is endemic in artificial intelligence research.

Arguably the program IDA [Franklin 2003] mentioned by Aleksander in his article has passed the Turing test in the very narrow domain of allocating sailors to new assignments by communicating in natural language by e-mail. IDA does not understand the communications of the sailors in the same way a human would: it matches the content of the incoming e-mail to one of a few dozen templates eg “please find job”. IDA would fail on general knowledge or anything outside its templates. Yet it is claimed to be conscious by ‘machine consciousness’ advocates.
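The kind of template matching described above can be sketched in a few lines of Python. This is not IDA's code: the templates other than “please find job”, the word-set matching rule and the function name are all assumptions of mine, chosen only to show how a message can be classified without being understood and how anything outside the templates simply falls through.

```python
import re
from typing import Optional

# Hypothetical templates (assumed for illustration): each template name maps
# to the set of words that must all appear in the e-mail.
TEMPLATES = {
    "please find job": {"please", "find", "job"},
    "accept offered job": {"accept", "job"},
}


def match_template(email_text: str) -> Optional[str]:
    """Return the most specific template whose words all occur, else None."""
    words = set(re.findall(r"[a-z]+", email_text.lower()))
    best_name = None
    best_size = 0
    for name, required in TEMPLATES.items():
        # A template matches only if every one of its required words is present.
        if required <= words and len(required) > best_size:
            best_name, best_size = name, len(required)
    return best_name


if __name__ == "__main__":
    print(match_template("Please find me a new job in San Diego"))
    print(match_template("What is the capital of France?"))
```

A general knowledge question returns nothing because no template covers it, which is the failure mode noted above.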

As a tougher test for machine consciousness I propose the ‘Reid Test’. The Scottish Enlightenment philosopher Thomas Reid (1710-1796) defined common sense as “that degree of judgment which is common to men with whom we can converse and transact business.” (Reid 1785). Consciousness and common sense are not quite the same concept but I believe consciousness is a prerequisite for common sense. Anything that demonstrates common sense must be conscious, I think. I contend that consciousness requires the abilities to perceive (external objects in 3 dimensions and one’s location in the world), understand (words and abstract concepts), imagine (conceive novel situations), communicate (in some natural language) and act on the environment. Consciousness without emotion would seem paradoxical (the uncanny valley) for emotion reveals the degree of involvement of the subject with the object. Clearly the scope of these abilities would be limited in the initial stages of development of any conscious machine. In addition the machine must be open to internal inspection so that any ‘magic’ is exposed. History shows that humans can be deceived by determined trickery.

Animals other than humans show abilities in these domains to the point where we believe them to be conscious. For example dogs can remember the layout of their environment, understand a limited number of commands and the task at hand, imagine certain situations and behaviours (think sheep dogs), and bark and whine in appropriate places. They obviously display emotions. The behaviour of trained chimps and orang-utans with sign language is even more impressive. What we don’t have are scales of achievement in these domains that would allow us to measure the degree of consciousness. It seems to be an all-or-nothing attribute that is confused with and by other attributes such as intelligence, social context and trained response on the part of the animal, and by empathy on our part. Consciousness itself must be subject to more investigation before we can definitively ascribe consciousness to lower animals such as bees.

Developing a conscious machine requires a lot of effort in physics, computer science, psychology, neuroscience, philosophy and so on. It also requires a notable lack of arrogance and more than mere assertion. It also requires good luck in finding that metaphysical reality actually allows machine consciousness.

So to answer Aleksander’s query: Is Machine Consciousness fact or fiction?

For the moment and the foreseeable future: fiction. But keep trying.

References

Block N (1994) Consciousness in A companion to Philosophy of Mind ed S Guttenplan, Blackwell

Churchland PM (1981) Eliminative materialism and the propositional attitudes Journal of philosophy vol 78 no 2 section 1

Damasio A (1999) The Feeling of what happens Heinemann p16

Franklin S (2003) IDA: A conscious artifact? Journal of Consciousness Studies vol 10 no 4-5 p47-66

Gibson JJ (1986) The Ecological Approach to Visual Perception Lawrence Erlbaum Associates

Guzeldere G (1995) Problems of Consciousness: a perspective on contemporary issues, current debates Journal of Consciousness Studies vol 2 no 2 p118

Huxley T (1912) Method and Results Macmillan : p240, p243 available at http://www.archive.org/details/methodresultsess00huxluoft

Leibniz G (1714) Monadologie sec 17 transl R Latta available at http://philosophy.eserver.org/leibniz-monadology.txt

Nagel T (1974) What is it like to be a bat? Philosophical Review vol 83, 4 p435 available at http://members.aol.com/NeoNoetics/Nagel_Bat.html

Reid T (1785) Essays on the Intellectual Powers of Man, Essay 6 Chapter 2 (p229) available at http://www.earlymoderntexts.com/pdfs/reid1785essay6_1.pdf

Ryle G (1949,2000) The Concept of Mind Penguin

Soon CS, Brass M, Heinze H-J & Haynes J-D (2008) Unconscious determinants of free decisions in the human brain. Nature Neuroscience 11, p543 – 545

Swartz Foundation (2001) Can a Machine be Conscious? available at http://www.theswartzfoundation.org/banbury_e.asp

Turing A (1950) Computing Machinery and Intelligence Mind 59 (236) p433-460 available http://www.loebner.net/Prizef/TuringArticle.html

Watson JB (1913) Psychology as the Behaviorist Views it. Psychological Review, vol 20, p158-177 available at http://psychclassics.yorku.ca/Watson/views.htm

Wegner D & Wheatley T (1999) Apparent Mental Causation: Sources of the Experience of Will American Psychologist vol 54 no 7 p480-492 available at http://www.wjh.harvard.edu/~wegner/pdfs/Wegner&Wheatley1999.pdf

Weizenbaum J (1976) Computer Power and Human Reason Penguin p188
